```python
%load_ext autoreload
%autoreload 2
```

Link to the source code: https://github.com/yanruoz/yanruoz.github.io/blob/main/posts/linear_reg/linear_reg.py

Implement Linear Regression Two Ways

# import packages

import numpy as np

from matplotlib import pyplot as plt

from linear_reg import LinearRegression

from sklearn.linear_model import Lasso# generate dataset

np.random.seed(1234)

def pad(X):

return np.append(X, np.ones((X.shape[0], 1)), 1)

def LR_data(n_train = 100, n_val = 100, p_features = 1, noise = .1, w = None):

if w is None:

w = np.random.rand(p_features + 1) + .2

X_train = np.random.rand(n_train, p_features)

y_train = pad(X_train)@w + noise*np.random.randn(n_train)

X_val = np.random.rand(n_val, p_features)

y_val = pad(X_val)@w + noise*np.random.randn(n_val)

return X_train, y_train, X_val, y_val# plot dataset

# set variables and parameters

np.random.seed(1234)

n_train = 100

n_val = 100

p_features = 1

noise = 0.2

# create some data

X_train, y_train, X_val, y_val = LR_data(n_train, n_val, p_features, noise)

# plot it

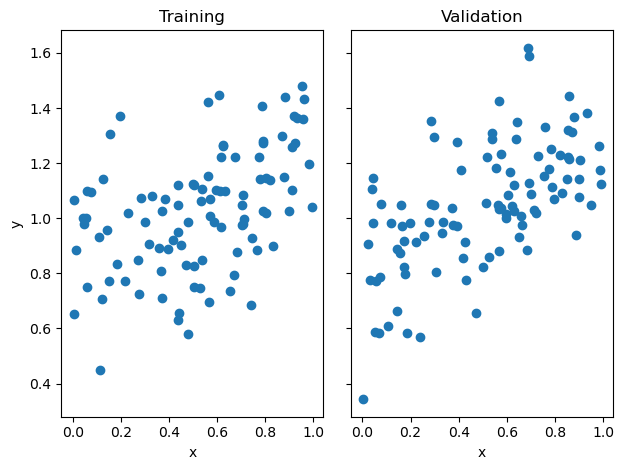

fig, axarr = plt.subplots(1, 2, sharex = True, sharey = True)

axarr[0].scatter(X_train, y_train)

axarr[1].scatter(X_val, y_val)

labs = axarr[0].set(title = "Training", xlabel = "x", ylabel = "y")

labs = axarr[1].set(title = "Validation", xlabel = "x")

plt.tight_layout()

After obtaining the dataset, we first fit the model with the analytic method and then inspect the training score, the validation score, and the weights.
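The `LinearRegression` class lives in linear_reg.py and is not reproduced in this post. As a rough sketch of what an analytic fit computes, assuming the textbook normal-equations solution `w = (XᵀX)⁻¹Xᵀy` on the padded feature matrix and assuming the score is the coefficient of determination, here are two hypothetical helpers (`fit_analytic`, `coef_determination`). They are not the class's API.

```python
import numpy as np

def pad(X):
    # append a column of ones so the last weight acts as the intercept
    return np.append(X, np.ones((X.shape[0], 1)), 1)

def fit_analytic(X, y):
    # hypothetical helper: solve the normal equations (X^T X) w = X^T y;
    # np.linalg.solve avoids forming an explicit inverse
    X_ = pad(X)
    return np.linalg.solve(X_.T @ X_, X_.T @ y)

def coef_determination(X, y, w):
    # assumed score: 1 - (residual sum of squares) / (total sum of squares)
    y_hat = pad(X) @ w
    ss_res = np.sum((y - y_hat) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    return 1 - ss_res / ss_tot

# small synthetic data set in the spirit of LR_data (one feature, noise 0.2)
rng = np.random.default_rng(1234)
X = rng.random((100, 1))
y = pad(X) @ np.array([0.4, 0.8]) + 0.2 * rng.standard_normal(100)

w_hat = fit_analytic(X, y)
print(w_hat, coef_determination(X, y, w_hat))
```

On the training data, this score always lies between 0 and 1 because the model includes an intercept. On validation data it can be negative.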

```python
# analytic method
np.random.seed(1234)
LR = LinearRegression()
LR.fit(X_train, y_train)  # I used the analytical formula as my default fit method
print(f"Training score = {LR.score(X_train, y_train).round(4)}")
print(f"Validation score = {LR.score(X_val, y_val).round(4)}")
LR.w
```

```
Training score = 0.2117
Validation score = 0.3233
array([0.36147991, 0.83389459])
```

Then, we fit the model with regular gradient descent and inspect the weights. The optimized weights from the two methods are quite close.
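Gradient descent reaches the same weights iteratively. The sketch below assumes the loss is the sum of squared errors, whose gradient is `2 (XᵀX w − Xᵀy)`, and it precomputes `XᵀX` and `Xᵀy` once. `fit_gradient_sketch` is a hypothetical stand-in: the actual update in linear_reg.py may scale the gradient differently. The sketch also records a `score_history` list, similar to the one plotted in this post.

```python
import numpy as np

def pad(X):
    # append a column of ones so the last weight acts as the intercept
    return np.append(X, np.ones((X.shape[0], 1)), 1)

def fit_gradient_sketch(X, y, alpha=0.001, max_epochs=1000):
    # hypothetical helper: full-batch gradient descent on ||X w - y||^2
    X_ = pad(X)
    P = X_.T @ X_            # computed once, reused at every step
    q = X_.T @ y
    w = np.zeros(X_.shape[1])
    ss_tot = np.sum((y - y.mean()) ** 2)
    score_history = []
    for _ in range(max_epochs):
        w = w - alpha * 2 * (P @ w - q)   # gradient step
        ss_res = np.sum((X_ @ w - y) ** 2)
        score_history.append(1 - ss_res / ss_tot)  # assumed score: R^2
    return w, score_history

rng = np.random.default_rng(1234)
X = rng.random((100, 1))
y = pad(X) @ np.array([0.4, 0.8]) + 0.2 * rng.standard_normal(100)

w_gd, score_history = fit_gradient_sketch(X, y, alpha=0.001, max_epochs=1000)
w_exact = np.linalg.lstsq(pad(X), y, rcond=None)[0]
print(w_gd, w_exact)
```

For data like this, `alpha = 0.001` is small relative to the largest eigenvalue of `2XᵀX`. As a result, the loss decreases at every step and the recorded score never goes down.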

```python
# regular gradient
np.random.seed(1234)
LR_reg = LinearRegression()
LR_reg.fit_gradient(X_train, y_train, alpha = 0.001, max_epochs = 1000)
LR_reg.w
```

```
array([0.34744356, 0.84172573])
```

Lastly, we fit the model with stochastic gradient descent and again obtain similar final weights.
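Stochastic gradient descent replaces the full gradient with the gradient computed on a small random batch. Here is a minimal mini-batch sketch. It assumes the sum-of-squared-errors loss restricted to each batch, a batch size of 10, and a reshuffle every epoch. `fit_stochastic_sketch`, `batch_size`, and `seed` are hypothetical names, not parameters of the author's class.

```python
import numpy as np

def pad(X):
    # append a column of ones so the last weight acts as the intercept
    return np.append(X, np.ones((X.shape[0], 1)), 1)

def fit_stochastic_sketch(X, y, alpha=0.001, max_epochs=1000, batch_size=10, seed=0):
    # hypothetical helper: mini-batch SGD; each step uses the gradient
    # 2 X_b^T (X_b w - y_b) of one batch instead of the whole data set
    rng = np.random.default_rng(seed)
    X_ = pad(X)
    n = X_.shape[0]
    w = np.zeros(X_.shape[1])
    for _ in range(max_epochs):
        order = rng.permutation(n)            # reshuffle once per epoch
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            Xb, yb = X_[idx], y[idx]
            w = w - alpha * 2 * Xb.T @ (Xb @ w - yb)
    return w

rng = np.random.default_rng(1234)
X = rng.random((100, 1))
y = pad(X) @ np.array([0.4, 0.8]) + 0.2 * rng.standard_normal(100)

w_sgd = fit_stochastic_sketch(X, y)
w_exact = np.linalg.lstsq(pad(X), y, rcond=None)[0]
print(w_sgd, w_exact)
```

With a constant step size, SGD keeps jittering around the least-squares solution instead of settling exactly on it. This is one plausible reason its final weights differ slightly from the analytic ones.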

# stochastic gradient

np.random.seed(1234)

LR_sto = LinearRegression()

LR_sto.fit(X_train, y_train, method = 'gradient', alpha = 0.001, m_epochs = 1000)

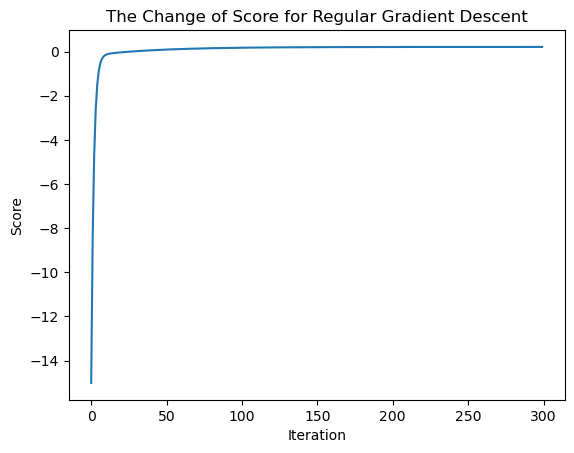

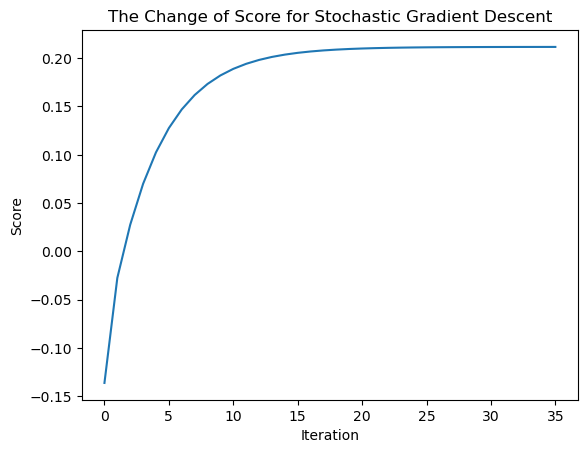

LR_sto.warray([0.35754189, 0.83609168])In the following graphs, we see how the score changed over time for the regular and the stochastic gradient descent respectively.

plt.plot(LR_reg.score_history)

labels = plt.gca().set(xlabel = "Iteration", ylabel = "Score")

plt.title("The Change of Score for Regular Gradient Descent")Text(0.5, 1.0, 'The Change of Score for Regular Gradient Descent')

plt.plot(LR_sto.score_history)

labels = plt.gca().set(xlabel = "Iteration", ylabel = "Score")

plt.title("The Change of Score for Stochastic Gradient Descent")Text(0.5, 1.0, 'The Change of Score for Stochastic Gradient Descent')

Experiments

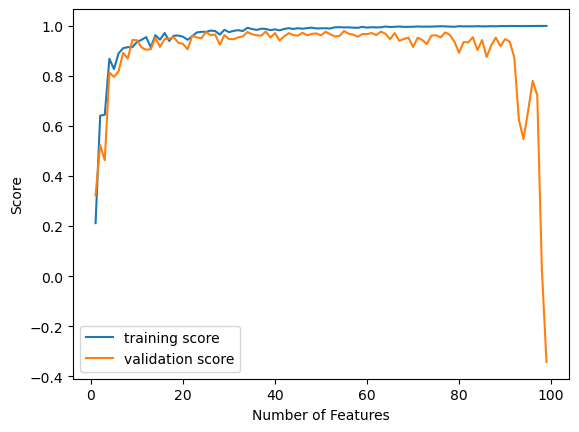

In the experiment below, we plot the training and validation scores as the number of features increases while the number of training points stays fixed at 100. Initially, adding features leads to higher scores overall. However, once the number of features reaches about 90, the validation score drops sharply. As the number of features approaches the number of training points, the validation score keeps falling despite some fluctuation and eventually becomes negative, indicating a poor fit on unseen data. This illustrates how overfitting can hurt performance.
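The collapse near p_features = n_train has a simple cause. With n_train − 1 features plus an intercept, the model has exactly n_train weights for n_train points, so least squares can pass through every training point. The sketch below uses `np.linalg.lstsq` as a stand-in for the author's class. It also uses a hypothetical `make_data` that re-implements LR_data with a NumPy Generator, so its numbers will not match the plot. It shows the training score reaching essentially 1 at p = n − 1 while the validation score falls behind. If the score is the coefficient of determination, a negative validation score means the model does worse than always predicting the mean of y.

```python
import numpy as np

def pad(X):
    # append a column of ones so the last weight acts as the intercept
    return np.append(X, np.ones((X.shape[0], 1)), 1)

def make_data(rng, n, p, noise=0.2):
    # hypothetical re-implementation of LR_data: uniform features,
    # weights in [0.2, 1.2), Gaussian noise
    w = rng.random(p + 1) + 0.2
    X_train, X_val = rng.random((n, p)), rng.random((n, p))
    y_train = pad(X_train) @ w + noise * rng.standard_normal(n)
    y_val = pad(X_val) @ w + noise * rng.standard_normal(n)
    return X_train, y_train, X_val, y_val

def r2(y, y_hat):
    # coefficient of determination
    return 1 - np.sum((y - y_hat) ** 2) / np.sum((y - y.mean()) ** 2)

rng = np.random.default_rng(1234)
n = 100
results = {}
for p in [1, 50, 90, n - 1]:
    X_train, y_train, X_val, y_val = make_data(rng, n, p)
    # least-squares fit; at p = n - 1 there are n weights for n points
    w = np.linalg.lstsq(pad(X_train), y_train, rcond=None)[0]
    results[p] = (r2(y_train, pad(X_train) @ w), r2(y_val, pad(X_val) @ w))
    print(p, results[p])
```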

```python
# create n_train and a list of p_features
np.random.seed(1234)
n_train = 100
n_val = 100
p_features_list = np.arange(1, n_train, 1).tolist()  # step = 1
# print(p_features_list)
noise = 0.2

# create empty lists to store training and validation scores
training_score_list = []
validation_score_list = []

for p_features in p_features_list:
    # create some data
    X_train, y_train, X_val, y_val = LR_data(n_train, n_val, p_features, noise)

    # fit the model
    LR = LinearRegression()
    LR.fit(X_train, y_train)

    # compute and append scores
    training_score = LR.score(X_train, y_train).round(4)
    validation_score = LR.score(X_val, y_val).round(4)
    training_score_list.append(training_score)
    validation_score_list.append(validation_score)

# plot
plt.plot(p_features_list, training_score_list, label = "training score")
plt.plot(p_features_list, validation_score_list, label = "validation score")
plt.xlabel("Number of Features")
plt.ylabel("Score")
plt.legend(loc='best')
plt.show()  # call the function; the bare name only echoes the function object
```

LASSO Regularization

Implementation

```python
# use LASSO regularization
L = Lasso(alpha = 0.001)

# fit the model on data
p_features = n_train - 1
X_train, y_train, X_val, y_val = LR_data(n_train, n_val, p_features, noise)
L.fit(X_train, y_train)
```

```
Lasso(alpha=0.001)
```


```python
# compute the score
L.score(X_val, y_val)
```

```
0.7806576461432739
```

Experiments

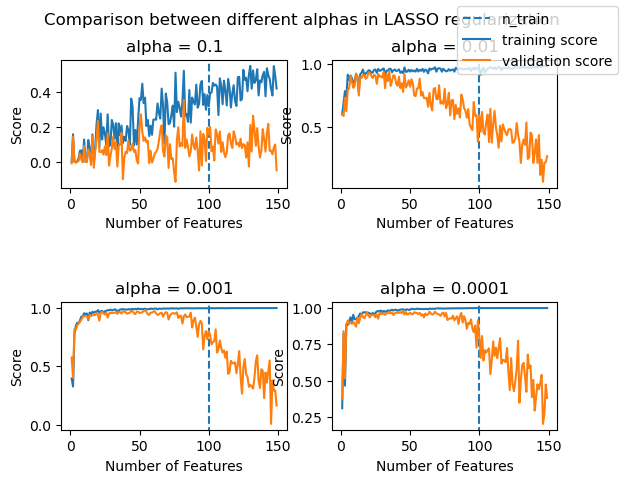

We repeat the linear regression experiment with LASSO, this time increasing the number of features up to and past n_train - 1 (here n_train = 100, and p_features goes up to 149). Each subplot shows how the training and validation scores change as the number of features increases, using a different value of the regularization strength alpha.
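For reference, the scikit-learn documentation gives the objective that `Lasso` minimizes. Here n is the number of training samples and α is the `alpha` argument varied in these subplots. The intercept is fit separately and is not penalized. A larger α puts more weight on the L1 term and typically sets more coefficients exactly to zero:

$$
\min_{w} \; \frac{1}{2n} \lVert y - Xw \rVert_2^2 + \alpha \lVert w \rVert_1
$$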

```python
# create n_train and a list of p_features
np.random.seed(1234)
n_train = 100
n_val = 100
p_features_list = np.arange(1, n_train + 50, 1).tolist()
# print(p_features_list)
noise = 0.2

# set subplot parameters (figsize is passed here so it applies to this figure)
fig, axarr = plt.subplots(2, 2, figsize = (10, 4))
fig.suptitle('Comparison between different alphas in LASSO regularization')

# regularization strengths to compare, one per subplot
alpha_list = [0.1, 0.01, 0.001, 0.0001]

# draw subplots; axarr.flat visits (0,0), (0,1), (1,0), (1,1) in order
for ax, alpha in zip(axarr.flat, alpha_list):
    # create empty lists to store training and validation scores
    training_score_list = []
    validation_score_list = []

    for p_features in p_features_list:
        # create data
        X_train, y_train, X_val, y_val = LR_data(n_train, n_val, p_features, noise)

        # fit the model
        L = Lasso(alpha = alpha)
        L.fit(X_train, y_train)

        # compute and append scores (round() works for numpy and Python floats)
        training_score_list.append(round(L.score(X_train, y_train), 4))
        validation_score_list.append(round(L.score(X_val, y_val), 4))

    # label, axis, and plot
    ax.set_title(f"alpha = {alpha}")
    ax.set(xlabel = "Number of Features", ylabel = "Score")
    l1 = ax.axvline(x = n_train, linestyle = 'dashed', label = "n_train")
    l2, = ax.plot(p_features_list, training_score_list, label = "training score")
    l3, = ax.plot(p_features_list, validation_score_list, label = "validation score")

# one shared legend for the whole figure; handles and labels both passed by keyword
labels = ["n_train", "training score", "validation score"]
fig.legend(handles = [l1, l2, l3], labels = labels, loc = "upper right")
plt.subplots_adjust(hspace = .9)
```

```
/Applications/anaconda3/envs/ml-0451/lib/python3.9/site-packages/sklearn/linear_model/_coordinate_descent.py:634: ConvergenceWarning: Objective did not converge. You might want to increase the number of iterations, check the scale of the features or consider increasing regularisation. Duality gap: 5.808e-02, tolerance: 4.225e-02
  model = cd_fast.enet_coordinate_descent(
```



As alpha decreases, the model tends to reach a better score and to reach it with fewer features. The scores are also significantly higher than those of plain linear regression. However, overfitting still sets in when the number of features becomes too large.
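What alpha changes is how many weights survive the L1 penalty. The minimal sketch below uses scikit-learn's `Lasso` with the same four alphas at p = n_train − 1 and counts the nonzero entries of `coef_`. It re-implements the LR_data process with a NumPy Generator, so its numbers will not match the plots. Smaller alphas may emit a `ConvergenceWarning`.

```python
import numpy as np
from sklearn.linear_model import Lasso

def pad(X):
    # append a column of ones so the last weight acts as the intercept
    return np.append(X, np.ones((X.shape[0], 1)), 1)

rng = np.random.default_rng(1234)
n, p, noise = 100, 99, 0.2          # p = n_train - 1, as in the LASSO section
w = rng.random(p + 1) + 0.2         # same weight range as LR_data
X_train, X_val = rng.random((n, p)), rng.random((n, p))
y_train = pad(X_train) @ w + noise * rng.standard_normal(n)
y_val = pad(X_val) @ w + noise * rng.standard_normal(n)

nonzero = {}
for alpha in [0.1, 0.01, 0.001, 0.0001]:
    model = Lasso(alpha=alpha).fit(X_train, y_train)
    nonzero[alpha] = int(np.count_nonzero(model.coef_))
    print(f"alpha={alpha}: {nonzero[alpha]} nonzero weights, "
          f"validation score {model.score(X_val, y_val):.3f}")
```

A large alpha zeroes out most weights and underfits. A very small alpha keeps nearly all of them and behaves more like unregularized least squares.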